When gearing up to run a test on your website, it’s critical to understand the errors you may encounter when analyzing the results of your experiment. Why? Because in statistical hypothesis testing, no test can ever be 100% conclusive.

When running a website test, we look for statistically significant results, meaning results that are unlikely to be explained by chance alone. In practice, this lets us attribute the results to a specific cause (e.g. a change made to our site) with a high level of confidence.

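When testing, marketers should aim for statistical significance at a significance level (α) of 5%, meaning the test’s p-value must fall below 0.05. In other words, if the change truly had no effect, there would be less than a 5% chance of observing a difference at least as large as the one measured. This corresponds to a 95% confidence level. Note that it does not mean there is a 95% chance that the results are correct. This is the threshold implied when “statistical significance” is used throughout this article.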
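To make the 5% threshold concrete, here is a minimal sketch of how a p-value might be checked against it. It assumes the metric is a conversion rate and uses a pooled two-proportion z-test, which is one common choice; your testing tool may use a different method. The function name and the visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test (illustrative helper)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate, assuming the null hypothesis (no difference) is true.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Probability of a difference at least this extreme in either direction.
    return 2 * (1 - NormalDist().cdf(abs(z)))


ALPHA = 0.05  # 5% significance level, i.e. a 95% confidence level

# Hypothetical results: 10,000 visitors per arm, 500 vs 580 conversions.
p = two_proportion_p_value(500, 10_000, 580, 10_000)
print(f"p-value = {p:.4f}, significant: {p < ALPHA}")
```

With these made-up numbers the p-value comes out at roughly 0.01, below 0.05, so the difference would count as statistically significant.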

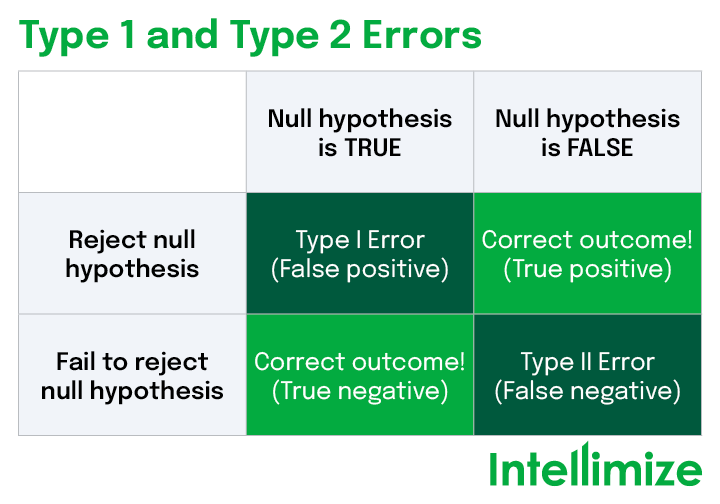

Even though hypothesis tests are considered reliable, random variation in the data means errors can still occur, leading to false positives and false negatives. These two kinds of errors are called type 1 and type 2 errors. Keep reading to learn what they are and how to avoid them.

What is a type 1 error?

A type 1 error is a false positive: you reject your null hypothesis, concluding that your change made a difference when it really didn’t. A false positive can occur purely by chance (e.g. the result falls within the 5% false-positive rate your significance level allows), or because another variable you didn’t account for affected the outcome.
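One way to see the 5% false-positive rate in action is an A/A simulation: both arms share the same true conversion rate, so any “winner” is a type 1 error. This sketch assumes a 10% conversion rate and 1,000 visitors per arm, and uses a pooled two-proportion z-test as a stand-in for whatever method your testing tool applies; all names and numbers are illustrative.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided pooled two-proportion z-test (illustrative helper)."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_conversions(rng, n, rate):
    # Each visitor converts independently with probability `rate`.
    return sum(rng.random() < rate for _ in range(n))


def aa_false_positive_rate(n_tests=1000, n_per_arm=1000, rate=0.10, alpha=0.05, seed=1):
    """Share of A/A tests that wrongly come out 'significant' (hypothetical setup)."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(n_tests):
        # Both arms have the same true rate, so the null hypothesis is true.
        a = simulate_conversions(rng, n_per_arm, rate)
        b = simulate_conversions(rng, n_per_arm, rate)
        if p_value(a, n_per_arm, b, n_per_arm) < alpha:
            false_positives += 1  # a type 1 error
    return false_positives / n_tests


print(f"False positive rate: {aa_false_positive_rate():.1%}")
```

Across many simulated tests, roughly 5% come out “significant” even though nothing changed, which is exactly the risk the significance level allows.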

Type 1 error example

You’ve chosen to run an A/B test on your ecommerce website over a period that overlaps with a winter holiday. Because online shoppers behave differently during this period, you may be led to believe that a certain variation is a winner. In reality, however, had you run the test during a steadier period, the results might have shown little to no change.

How can I avoid a type 1 error?

Unfortunately, there is no way to completely avoid type 1 errors. That said, here are a few tips you can implement to reduce the likelihood of a type 1 error during your next website test:

- Increase your sample size. To reach a 95% confidence level (a 5% significance level), you’ll need to run tests for longer and across many site visitors. Be mindful that if you choose a lower confidence threshold (e.g. 90%), your likelihood of type 1 errors increases. Similarly, increasing your Minimum Detectable Effect (MDE) reduces the sample size needed to reach statistical significance, but it can mean missing smaller yet non-trivial performance improvements in exchange for reaching significance in a reasonable amount of time.

- Be mindful of external variables. When running tests on your website, consider external variables that may affect the results. In the type 1 error example above, the results were skewed because the test ran during the holiday shopping season.
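The trade-offs in the first tip can be sketched with the standard normal-approximation sample size formula for comparing two conversion rates. This is an approximation under assumed inputs (a 5% baseline conversion rate, 80% power, and a relative MDE); your testing tool may calculate sample size differently, and the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline, relative_mde, confidence=0.95, power=0.80):
    """Approximate visitors needed per variant for a two-sided two-proportion test."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)  # conversion rate if the MDE is real
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)  # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


print(sample_size_per_variant(0.05, 0.10))                   # 95% confidence, 10% MDE
print(sample_size_per_variant(0.05, 0.10, confidence=0.90))  # lower threshold
print(sample_size_per_variant(0.05, 0.20))                   # larger MDE
```

Under these assumptions, a 10% relative MDE needs roughly 31,000 visitors per variant at 95% confidence and roughly 25,000 at 90%, while raising the MDE to 20% drops the requirement to roughly 8,200.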

What is a type 2 error?

A type 2 error is a false negative: you fail to reject the null hypothesis even though there is a real difference between the control and the variation. This can occur when your sample size is too small or your statistical power is too low.
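Statistical power is the probability that a test detects a difference that really exists, so the type 2 error rate is 1 minus the power. The following minimal sketch uses the normal approximation for two conversion rates and assumes a hypothetical 5% baseline and a true 6% rate in the variation. It shows how the type 2 error rate shrinks as the sample grows; the function name is illustrative.

```python
from math import sqrt
from statistics import NormalDist


def power_two_proportions(p1, p2, n_per_variant, alpha=0.05):
    """Approximate power of a two-sided two-proportion z-test (illustrative helper)."""
    se = sqrt((p1 * (1 - p1) + p2 * (1 - p2)) / n_per_variant)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    # Ignores the negligible chance of 'significance' in the wrong direction.
    return NormalDist().cdf(abs(p2 - p1) / se - z_crit)


for n in (1_000, 5_000, 20_000):
    power = power_two_proportions(0.05, 0.06, n)
    print(f"n={n:>6}: power={power:.2f}, type 2 error rate={1 - power:.2f}")
```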

Type 2 errors can also have serious ramifications for your experimentation program. Not only do you miss out on learnings and valuable customer insights but, more importantly, a type 2 error could send your testing roadmap in the wrong direction.

Type 2 error example

Let’s say you want to run an A/B test on your B2B website to increase the number of demo requests your company receives. In the variation, you change the color of the demo request button on your homepage from blue to green. After running the test for four days, you see no clear winner and stop the test.

The following quarter you run the test again, except this time you leave it running for fourteen days, covering two full business cycles. Much to your surprise, this time the green button is the clear winner!

What happened? Most likely, the first time you ran the test you encountered a false negative because your sample size was too small to detect the difference.
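The demo-button scenario can be simulated to show why the four-day test was likely to miss the winner. The example gives no traffic or conversion figures, so this sketch assumes hypothetical values (500 visitors per variant per day, a 4% demo request rate for blue and 5% for green) and uses a pooled two-proportion z-test. It counts how often each test length would correctly declare green the winner; all names are illustrative.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided pooled two-proportion z-test (illustrative helper)."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def detection_rate(days, visitors_per_variant_per_day=500, blue_rate=0.04,
                   green_rate=0.05, alpha=0.05, n_sims=200, seed=7):
    """Share of simulated tests in which the truly better green button wins."""
    rng = random.Random(seed)
    n = days * visitors_per_variant_per_day  # visitors per variant (assumed traffic)
    wins = 0
    for _ in range(n_sims):
        blue = sum(rng.random() < blue_rate for _ in range(n))
        green = sum(rng.random() < green_rate for _ in range(n))
        # Count only significant results in the correct direction.
        if green > blue and p_value(blue, n, green, n) < alpha:
            wins += 1
    return wins / n_sims


print(f"4 days:  {detection_rate(4):.0%} of tests find the winner")
print(f"14 days: {detection_rate(14):.0%} of tests find the winner")
```

With these assumed numbers, the four-day test finds the winner only about a third of the time, while the fourteen-day test does so roughly 80% of the time.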

How can I avoid a type 2 error?

As with type 1 errors, it is not possible to entirely eliminate the possibility of a type 2 error in your website tests. But there are ways to reduce the likelihood of one. Here’s how:

- Increase your sample size. As the type 2 error example shows, you need to run your tests for longer and across a larger audience to gather enough data.

- Take big swings. The larger the change, and the larger its expected impact on your conversion rate (i.e. the MDE), the less traffic you need to reach statistical significance while maintaining high statistical power.
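Holding traffic fixed, a larger true effect raises power and therefore lowers the chance of a type 2 error. This sketch assumes a hypothetical 5% baseline conversion rate and 5,000 visitors per variant, and uses the normal approximation for the power of a two-proportion test; the function name is illustrative.

```python
from math import sqrt
from statistics import NormalDist


def power_two_proportions(p1, p2, n_per_variant, alpha=0.05):
    """Approximate power of a two-sided two-proportion z-test (illustrative helper)."""
    se = sqrt((p1 * (1 - p1) + p2 * (1 - p2)) / n_per_variant)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return NormalDist().cdf(abs(p2 - p1) / se - z_crit)


baseline = 0.05  # assumed control conversion rate
n = 5_000        # assumed fixed traffic budget per variant
for lift in (0.05, 0.10, 0.30):  # relative lifts: small, medium, big swing
    power = power_two_proportions(baseline, baseline * (1 + lift), n)
    print(f"{lift:.0%} lift: power={power:.2f}, type 2 error rate={1 - power:.2f}")
```

Under these assumptions, power rises from under 10% for a 5% lift to roughly 90% for a 30% lift.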

Understanding how these errors work in statistical hypothesis testing will help you keep an informed and watchful eye on every website test you run. Happy testing!